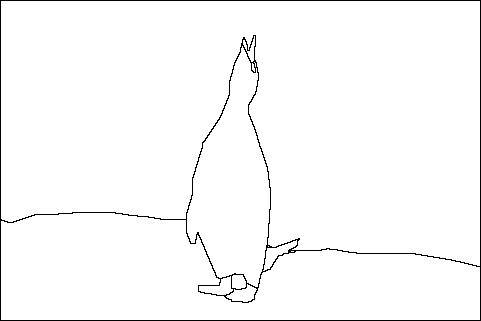

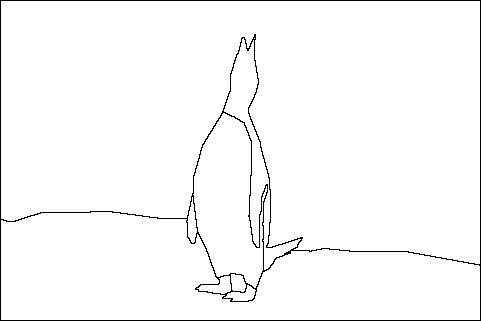

Image segmentation is a fundamental task in computer vision in which an image is divided into meaningful segments. However, despite significant advances and extensive research in this field, selecting a segmentation algorithm is still challenging, because people bring semantic considerations into their evaluations, so more than one solution can be acceptable.

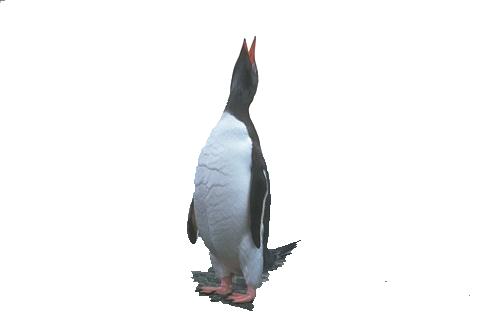

Interactive image segmentation addresses this ambiguity by adding prior knowledge from users to the process. The user's main task is to extract semantic objects from a given image. In general, users mark the object and its background by drawing bounding boxes or seeds (also known as scribbles or brush strokes).
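Both kinds of input amount to a partial labeling of the image. The following is a minimal sketch, assuming a hypothetical `Seeds` structure and label values that do not come from any particular tool: seeds are stored as sets of pixel coordinates, and a bounding box is turned into background seeds by marking every pixel outside it, which is how GrabCut initializes from a rectangle.

```python
from dataclasses import dataclass, field

# Hypothetical label values for a seed map (not a standard encoding).
UNKNOWN, BACKGROUND, FOREGROUND = 0, 1, 2


@dataclass
class Seeds:
    """User input as sets of (row, col) pixels marked as foreground or background."""
    foreground: set = field(default_factory=set)
    background: set = field(default_factory=set)

    def to_label_map(self, height, width):
        """Rasterize the seeds; pixels the user did not mark stay UNKNOWN."""
        labels = [[UNKNOWN] * width for _ in range(height)]
        for r, c in self.background:
            labels[r][c] = BACKGROUND
        for r, c in self.foreground:
            labels[r][c] = FOREGROUND
        return labels


def seeds_from_box(height, width, top, left, bottom, right):
    """Bounding-box input: every pixel outside the box becomes a background seed.

    top/left are inclusive, bottom/right exclusive; nothing inside the box is labeled.
    """
    background = {
        (r, c)
        for r in range(height)
        for c in range(width)
        if not (top <= r < bottom and left <= c < right)
    }
    return Seeds(background=background)


# Usage on a toy 4x6 image.
box_input = seeds_from_box(4, 6, top=1, left=1, bottom=3, right=5)
scribble_input = Seeds(foreground={(2, 2), (2, 3)}, background={(0, 0), (0, 1)})
scribble_labels = scribble_input.to_label_map(4, 6)
```

A box labels many pixels at once, but only as background; scribbles label few pixels, but can mark both classes.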

Although this user guidance improves segmentation results, it also makes this kind of algorithm harder to evaluate; for this reason, some works present subjective results or use non-canonical evaluations. For example, most evaluations of interactive segmentation use their own seeds, which prevents a fair comparison of performance across segmentation algorithms [1].

Several approaches have been proposed to address this problem, but each has drawbacks, as detailed below:

Datasets

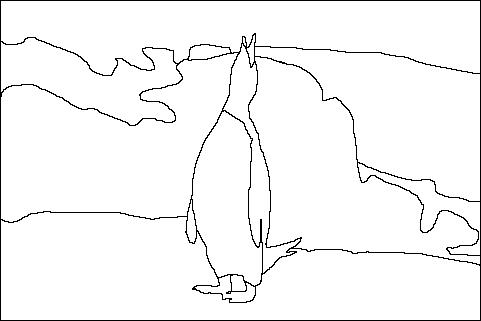

Even though the Berkeley segmentation dataset and benchmark are the widely used evaluation standard for automatic segmentation, its ground-truth data cannot be used to identify objects and background, because the dataset does not include a semantic interpretation [2]. Other public datasets do facilitate comparison, but they do not provide the user inputs that the algorithms need in order to obtain reproducible results:

- Weizmann segmentation database: it provides a suitable single-object dataset for evaluating interactive segmentation algorithms, but it does not provide user inputs [3].
- GrabCut database: this dataset contains 50 images, ground-truth data, and user-specified trimaps. However, these trimaps are neither optimal nor realistic inputs: they carry richer information, such as the object's shape, and most of the pixels are already labeled. Moreover, it would be difficult for users to create such trimaps [4].
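The claim that most pixels of a trimap are already labeled can be made concrete by counting them. This is a small sketch, assuming a hypothetical trimap encoding (0 = background, 128 = unknown, 255 = foreground) and a toy 4x4 trimap rather than real GrabCut data; it compares the labeled share of the trimap with that of three scribble pixels on an image of the same size.

```python
# Assumed trimap encoding, for illustration only; the GrabCut files may use other values.
TRIMAP_BACKGROUND, TRIMAP_UNKNOWN, TRIMAP_FOREGROUND = 0, 128, 255


def labeled_fraction(trimap):
    """Fraction of pixels that the trimap already labels as foreground or background."""
    total = sum(len(row) for row in trimap)
    labeled = sum(1 for row in trimap for value in row if value != TRIMAP_UNKNOWN)
    return labeled / total


def seed_fraction(num_seed_pixels, height, width):
    """Fraction of pixels covered by sparse seeds (scribbles)."""
    return num_seed_pixels / (height * width)


# Toy 4x4 trimap: only a thin band around the object is left unknown (128).
toy_trimap = [
    [0,   0,   0, 0],
    [0, 128, 128, 0],
    [0, 128, 255, 0],
    [0,   0,   0, 0],
]

trimap_share = labeled_fraction(toy_trimap)  # 13 of 16 pixels are pre-labeled
scribble_share = seed_fraction(3, 4, 4)      # e.g. two foreground seeds and one background seed
```

Even in this toy case the trimap decides the label of most pixels before the algorithm runs, which is why such inputs say little about how an algorithm copes with sparse user guidance.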

User experiments

In these experiments, users were shown images together with descriptions of the objects they had to extract [5]. Users then marked foreground and background pixels using a platform designed for this purpose. However, such evaluations are time-consuming, because they require constant user involvement, and their results cannot be repeated.

Automated evaluations

Automated evaluations replace users with algorithms that emulate their behavior [6, 7]. These robot users emulate user interactions by generating scribbles. Robot-user evaluations measure effort as the number of iterations required for an accurate segmentation, but they place each new seed according to the outcome of the previous interaction, so the generated seeds differ from one algorithm to another. This makes it impossible to obtain repeatable results.
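The dependence of the seed sequence on the algorithm can be seen in a toy simulation. This is a hypothetical sketch, not the robot user of the cited works: the placement rule (the mislabeled pixel closest to the centroid of all mislabeled pixels, given its ground-truth label) and the two toy algorithms `seed_pixels_only` and `nearest_seed` are assumptions chosen only to keep the example small.

```python
import math

# Toy ground truth: a 3x3 image whose only foreground pixel is the centre.
GROUND_TRUTH = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]


def seed_pixels_only(seeds, height, width):
    """Toy algorithm: only the foreground seed pixels themselves are foreground."""
    fg = {p for p, is_fg in seeds if is_fg}
    return [[(r, c) in fg for c in range(width)] for r in range(height)]


def nearest_seed(seeds, height, width):
    """Toy algorithm: each pixel copies the label of its nearest seed (Manhattan
    distance); ties and the no-seed case go to background."""
    fg = [p for p, is_fg in seeds if is_fg]
    bg = [p for p, is_fg in seeds if not is_fg]

    def dist(p, points):
        return min((abs(p[0] - q[0]) + abs(p[1] - q[1]) for q in points), default=math.inf)

    return [[dist((r, c), fg) < dist((r, c), bg) for c in range(width)] for r in range(height)]


def robot_user(segment, ground_truth, max_seeds=20):
    """Simulate a user: after each segmentation, add one seed on a mislabeled pixel.

    Hypothetical placement rule: the mislabeled pixel closest to the centroid of all
    mislabeled pixels (ties broken by row, then column), labeled with its ground truth.
    Returns the seed sequence; its length is the effort measure.
    """
    height, width = len(ground_truth), len(ground_truth[0])
    seeds = []
    while len(seeds) < max_seeds:
        mask = segment(seeds, height, width)
        errors = [(r, c) for r in range(height) for c in range(width)
                  if mask[r][c] != ground_truth[r][c]]
        if not errors:
            break
        cr = sum(r for r, _ in errors) / len(errors)
        cc = sum(c for _, c in errors) / len(errors)
        r, c = min(errors, key=lambda p: ((p[0] - cr) ** 2 + (p[1] - cc) ** 2, p[0], p[1]))
        seeds.append(((r, c), ground_truth[r][c]))
    return seeds


# The same robot user on the same image, driving two different algorithms.
seeds_a = robot_user(seed_pixels_only, GROUND_TRUTH)
seeds_b = robot_user(nearest_seed, GROUND_TRUTH)
```

Both runs start from the same first seed, but every later seed depends on the previous segmentation, so the two algorithms end up being evaluated on different inputs (one seed for the first, five for the second).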

My proposed approach

In summary, evaluation methods should account for user input in a way that allows results to be reproduced and compared in further studies. For this reason, I introduced a novel seed-based user input dataset that extends the well-known GrabCut dataset and the Geodesic Star Convexity dataset. For more information, see the second note.
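A fixed, seed-based input set turns the comparison into a deterministic computation. The sketch below is illustrative only: the JSON record layout, the two toy algorithms `foreground_seeds_only` and `seed_bounding_box`, and the use of the Jaccard index (intersection over union) as the score are assumptions, not the format or metric of the proposed dataset.

```python
import json

# Hypothetical record for one image: its seeds are stored once and reused by every
# algorithm. Field names are illustrative, not the format of any released dataset.
RECORD = json.loads("""
{
  "image": "toy",
  "height": 3,
  "width": 3,
  "foreground": [[0, 1], [2, 1]],
  "background": [[1, 0], [1, 2]]
}
""")

# Foreground pixels of the toy ground truth: the middle column.
GROUND_TRUTH = {(0, 1), (1, 1), (2, 1)}


def jaccard(mask, truth):
    """Intersection over union of two sets of foreground pixels."""
    union = mask | truth
    return len(mask & truth) / len(union) if union else 1.0


def foreground_seeds_only(fg, bg, height, width):
    """Toy algorithm: only the foreground seeds themselves are foreground."""
    return set(fg)


def seed_bounding_box(fg, bg, height, width):
    """Toy algorithm: the bounding box of the foreground seeds, minus background seeds."""
    if not fg:
        return set()
    rows = [r for r, _ in fg]
    cols = [c for _, c in fg]
    box = {(r, c)
           for r in range(min(rows), max(rows) + 1)
           for c in range(min(cols), max(cols) + 1)}
    return box - set(bg)


def evaluate(algorithms, record, truth):
    """Run every algorithm on the same fixed seeds and score it against the ground truth."""
    fg = [tuple(p) for p in record["foreground"]]
    bg = [tuple(p) for p in record["background"]]
    return {
        name: jaccard(algorithm(fg, bg, record["height"], record["width"]), truth)
        for name, algorithm in algorithms.items()
    }


ALGORITHMS = {"foreground_seeds_only": foreground_seeds_only, "seed_bounding_box": seed_bounding_box}
scores = evaluate(ALGORITHMS, RECORD, GROUND_TRUTH)
```

Because the seeds come from the stored record rather than from each algorithm's previous output, both algorithms receive identical input, and rerunning the evaluation yields the same scores.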

NOTE: This note is the first of two notes. See the presentation entry for more information.

1. J. Wu, Y. Zhao, J.-Y. Zhu, S. Luo, and Z. Tu. MILCut: A sweeping line multiple instance learning paradigm for interactive image segmentation. In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on, pages 256–263. IEEE, 2014.
2. P. Arbelaez, M. Maire, C. Fowlkes, and J. Malik. Contour detection and hierarchical image segmentation. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 33(5):898–916, 2011.
3. S. Alpert, M. Galun, R. Basri, and A. Brandt. Image segmentation by probabilistic bottom-up aggregation and cue integration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, June 2007.
4. C. Rother, V. Kolmogorov, and A. Blake. GrabCut: Interactive foreground extraction using iterated graph cuts. ACM Transactions on Graphics (TOG), 23(3):309–314, 2004.
5. K. McGuinness and N. E. O’Connor. A comparative evaluation of interactive segmentation algorithms. Pattern Recognition, 43(2):434–444, 2010.
6. K. McGuinness and N. E. O’Connor. Toward automated evaluation of interactive segmentation. Computer Vision and Image Understanding, 115(6):868–884, 2011.
7. V. Gulshan, C. Rother, A. Criminisi, A. Blake, and A. Zisserman. Geodesic star convexity for interactive image segmentation. In Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on, pages 3129–3136. IEEE, 2010.